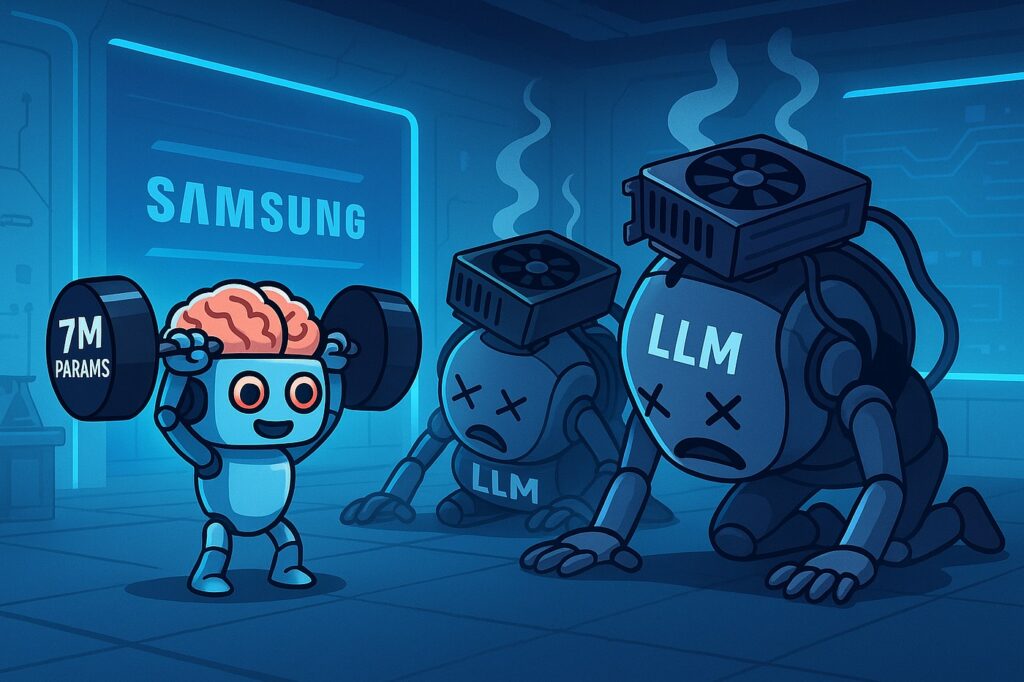

A new paper from a Samsung AI researcher describes how a small network can outperform gigantic Large Language Models (LLMs) at complex reasoning.

In the race to build the most capable AI, bigger has long been assumed to mean better. It’s no secret that tech behemoths have spent billions developing models of ever-more staggering proportions. Alexia Jolicoeur-Martineau of Samsung SAIL Montréal, however, argues that a radically different and more efficient path is possible with the Tiny Recursive Model (TRM).

With only 7 million parameters, less than 0.01% of the size of current state-of-the-art LLMs, TRM achieves new state-of-the-art results on notoriously challenging benchmarks, including the ARC-AGI intelligence test. Samsung’s work directly challenges the accepted wisdom that scale is the only way to advance AI capabilities, offering a more sustainable, parameter-efficient alternative.

Overcoming the limits of scale

Although LLMs have demonstrated impressive capabilities in generating human-like text, they can be fragile when performing complex, multi-step reasoning. Because they generate answers token by token, a single early mistake can derail everything that follows, leading to an incorrect final answer.

Techniques like Chain-of-Thought, where a model “thinks out loud” to break down a problem, have been developed in response. But these methods are computationally costly, often require large amounts of high-quality reasoning data that may not be available, and can still produce flawed logic. Even with these extensions, LLMs struggle to solve puzzles that require perfect logical execution.

Samsung’s research builds on a recent AI model called the Hierarchical Reasoning Model (HRM). HRM introduced two small neural networks that recursively work on a problem at different frequencies to refine an answer. It showed promise but was complicated, relying on speculative biological arguments and complex fixed-point theorems that were not guaranteed to apply.

Instead of HRM’s two networks, TRM employs a single, small network that iteratively refines both its internal “reasoning” and its predicted “answer”.

The model is given the question, an initial guess at the answer, and a latent reasoning feature. It first cycles through several steps to refine its latent reasoning based on all three inputs. Then, using this improved reasoning, it updates its prediction of the final answer. This whole procedure can be repeated up to 16 times, allowing the model to progressively correct its own mistakes in a parameter-efficient way.
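To make the nested loop concrete, here is a toy sketch of that control flow; it is not Samsung’s implementation. The learned network is replaced by two hypothetical hand-written rules, `update_latent` and `update_answer`, so the loop runs without training, and the “question” is simply a number `x` whose square root the loop refines. The cap of 16 outer rounds comes from the description above; `latent_steps=6` is an illustrative assumption standing in for “several steps”.

```python
import math

# Hypothetical stand-ins for TRM's single small learned network. Here two
# hand-written rules play that role so the loop runs; the toy question x
# asks for sqrt(x). Not Samsung's code.

def update_latent(x, y, z):
    """Nudge the latent reasoning state z toward the current error of answer y."""
    residual = x - y * y
    return z + 0.5 * (residual - z)

def update_answer(y, z):
    """Revise the answer from the current answer and latent state only."""
    return y + z / (2.0 * y)

def recursive_refine(x, y0=1.0, z0=0.0, latent_steps=6, max_rounds=16):
    """Up to `max_rounds` refinement rounds; each round first updates the
    latent `latent_steps` times, then revises the answer once."""
    y, z = y0, z0
    history = [y]
    for _ in range(max_rounds):
        for _ in range(latent_steps):
            z = update_latent(x, y, z)   # condition reasoning on (x, y, z)
        y = update_answer(y, z)          # improve the answer using (y, z)
        history.append(y)
    return y, history

if __name__ == "__main__":
    answer, trace = recursive_refine(2.0)
    print("refined answer:", answer, "reference:", math.sqrt(2.0))
    print("first few answers:", trace[:4])
```

The point of the sketch is the shape of the computation: the same small update rules are reused every round, so depth of reasoning comes from repetition rather than from extra parameters.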

A surprising finding of the study was that a tiny network with only two layers generalised far better than a four-layer version. The reduced size appears to prevent overfitting, a common problem when training deep models on small datasets.

TRM also ditches the abstruse maths that underpinned its predecessor. The original HRM could only justify its training method by assuming that its functions converge to a fixed point.

TRM sidesteps this altogether by back-propagating through its full recursion process. In an ablation study, this change alone produced a large leap in performance, raising accuracy on the Sudoku-Extreme benchmark from 56.5% to 87.4%.
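The difference between differentiating only the final step of a recursion (a one-step approximation of the kind a fixed-point assumption is used to justify) and back-propagating through every step can be seen on a toy problem. In the sketch below, a single scalar cell `z <- tanh(w*z + x)` stands in for the network; the functions and all numeric values are hypothetical and chosen only for illustration. `grad_full` computes the exact gradient through the whole unrolled recursion, while `grad_one_step` treats every earlier state as a constant.

```python
import math

# Toy stand-in for a recursive network: one scalar cell z <- tanh(w*z + x)
# applied `steps` times with the same weight w. Not Samsung's code.

def unroll(w, x, z0, steps):
    """Run the recursion and keep every intermediate state z_0..z_T."""
    zs = [z0]
    for _ in range(steps):
        zs.append(math.tanh(w * zs[-1] + x))
    return zs

def loss(w, x, z0, steps, target):
    """Squared error of the final state against a target."""
    return 0.5 * (unroll(w, x, z0, steps)[-1] - target) ** 2

def grad_full(w, x, z0, steps, target):
    """Exact dL/dw: back-propagate through every step of the recursion."""
    zs = unroll(w, x, z0, steps)
    dz = zs[-1] - target                # dL/dz_T
    dw = 0.0
    for k in range(steps, 0, -1):
        dpre = dz * (1.0 - zs[k] ** 2)  # back through tanh at step k
        dw += dpre * zs[k - 1]          # w's direct contribution at step k
        dz = dpre * w                   # gradient flowing back into z_{k-1}
    return dw

def grad_one_step(w, x, z0, steps, target):
    """Approximate dL/dw: differentiate only the last step, z_{T-1} held constant."""
    zs = unroll(w, x, z0, steps)
    dz = zs[-1] - target
    return dz * (1.0 - zs[-1] ** 2) * zs[-2]

if __name__ == "__main__":
    args = (0.8, 0.3, 0.0, 6, 0.9)  # w, x, z0, steps, target (arbitrary toy values)
    w, h = args[0], 1e-5
    numeric = (loss(w + h, *args[1:]) - loss(w - h, *args[1:])) / (2 * h)
    print("finite difference:", numeric)
    print("full backprop:    ", grad_full(*args))
    print("one-step approx:  ", grad_one_step(*args))
```

Running it shows the full gradient matching a finite-difference check while the one-step version drifts away from it. A toy scalar cell says nothing about the size of TRM’s gain, but it illustrates why an exact gradient through the recursion can train differently from an approximate one.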

Samsung’s model crushes AI benchmarks using less computing power

The results speak for themselves. On the challenging Sudoku-Extreme dataset, which has only 1,000 training examples, TRM reaches 87.4% test accuracy, a large improvement over HRM’s 55%. On Maze-Hard, a task that requires finding long paths through 30×30 mazes, TRM scores 85.3% against HRM’s 74.5%.

Most importantly, TRM makes significant leaps on the Abstraction and Reasoning Corpus (ARC-AGI), a benchmark designed to test true fluid intelligence in AI. With only 7M parameters, TRM achieves 44.6% accuracy on ARC-AGI-1 and 7.8% on ARC-AGI-2. This beats HRM, which uses a 27M-parameter model, and surpasses many of the world’s largest LLMs. For comparison, Gemini 2.5 Pro scores only 4.9% on ARC-AGI-2.

TRM’s training process is also more efficient. An adaptive mechanism called ACT (Adaptive Computation Time), which learns when the model has refined an answer enough and can move on to a new data sample, was simplified so that a second, expensive forward pass through the network is no longer required at each training step. This simplification came with no major difference in final generalisation.
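The halting idea can be sketched as follows, under a stated assumption: the halting signal is trained with binary cross-entropy against whether the current prediction is already fully correct, a target that is available from the same forward pass that produced the prediction. This is one plausible reading of the simplification described above, not Samsung’s code. `run_sample`, `bce_with_logits`, and the toy `forward` callback are hypothetical, and the answer loss and optimizer updates are omitted to keep the loop short.

```python
import math

def bce_with_logits(logit, target):
    """Numerically stable binary cross-entropy on a raw logit; target is 0.0 or 1.0."""
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))

def run_sample(forward, truth, max_steps=16):
    """Deep-supervision loop for one training sample with a simplified halting rule.

    `forward(step)` is a hypothetical model call returning (prediction, halt_logit)
    from ONE forward pass. The halting target is just "is this prediction fully
    correct?", so no second pass is needed to build it. Answer loss and optimizer
    updates are omitted."""
    total_halt_loss, passes = 0.0, 0
    for step in range(max_steps):
        pred, halt_logit = forward(step)
        passes += 1
        target = 1.0 if pred == truth else 0.0
        total_halt_loss += bce_with_logits(halt_logit, target)
        if halt_logit > 0.0:  # halting probability > 0.5: move on to the next sample
            break
    return total_halt_loss, passes

if __name__ == "__main__":
    truth = [1, 2, 3]

    def toy_forward(step):
        # Hypothetical model that gets the answer right from step 2 and becomes confident.
        if step >= 2:
            return [1, 2, 3], 2.0
        return [1, 2, 0], -2.0

    halt_loss, passes = run_sample(toy_forward, truth)
    print(f"halting loss {halt_loss:.4f} after {passes} forward passes")
```

Because the halting target depends only on the prediction already in hand, each supervision step costs exactly one forward pass, and a confident, correct answer frees the remaining steps for other samples.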

This research from Samsung makes a compelling case against the trend of ever-larger AI models. It shows that architectures capable of iterative reasoning and self-correction can solve extremely hard problems with a tiny fraction of the computational resources.